MemoryOS

Welcome to MemoryOS

MemoryOS is a memory operating system for personalized AI agents, enabling more coherent, personalized, and context-aware interactions. It adopts a hierarchical storage architecture with four core modules: Storage, Updating, Retrieval, and Generation, to achieve comprehensive and efficient memory management.

Key Features:

- 🏆 Top Performance: SOTA results in long-term memory benchmarks, boosting F1 by 49.11% and BLEU-1 by 46.18%.

- 🧠 Plug-and-Play Memory Management: Seamless integration of storage engines, update strategies, and retrieval algorithms.

- 🔧 Rich Toolset: Core tools include `add_memory`, `retrieve_memory`, and `get_user_profile`.

- 🌐 Universal LLM Support: Compatible with OpenAI, DeepSeek, Qwen, and more.

- 📦 Multi-level Memory: Short, mid, and long-term memory with automated user profile and knowledge updating.

- ⚡ Efficient Parallelism: Parallel memory retrieval and model inference for lower latency.

- 🖥️ Visualization Platform: Web-based memory analytics coming soon.

Get Started

- MemoryOS_PYPI Getting Started

- Prerequisites

- Python >= 3.10

- Installation

```bash
conda create -n MemoryOS python=3.10
conda activate MemoryOS
git clone https://github.com/BAI-LAB/MemoryOS.git
cd MemoryOS/memoryos-pypi
pip install -r requirements.txt
```

- Basic Usage

```python
import os
from memoryos import Memoryos

# --- Basic Configuration ---
USER_ID = "demo_user"
ASSISTANT_ID = "demo_assistant"
API_KEY = "YOUR_OPENAI_API_KEY"  # Replace with your key
BASE_URL = ""  # Optional: if using a custom OpenAI endpoint
DATA_STORAGE_PATH = "./simple_demo_data"
LLM_MODEL = "gpt-4o-mini"

def simple_demo():
    print("MemoryOS Simple Demo")

    # 1. Initialize MemoryOS
    print("Initializing MemoryOS...")
    try:
        memo = Memoryos(
            user_id=USER_ID,
            openai_api_key=API_KEY,
            openai_base_url=BASE_URL,
            data_storage_path=DATA_STORAGE_PATH,
            llm_model=LLM_MODEL,
            assistant_id=ASSISTANT_ID,
            short_term_capacity=7,
            mid_term_heat_threshold=5,
            retrieval_queue_capacity=7,
            long_term_knowledge_capacity=100
        )
        print("MemoryOS initialized successfully!\n")
    except Exception as e:
        print(f"Error: {e}")
        return

    # 2. Add some basic memories
    print("Adding some memories...")
    memo.add_memory(
        user_input="Hi! I'm Tom, I work as a data scientist in San Francisco.",
        agent_response="Hello Tom! Nice to meet you. Data science is such an exciting field. What kind of data do you work with?"
    )

    # 3. Ask a question that depends on the stored memory
    test_query = "What do you remember about my job?"
    print(f"User: {test_query}")

    response = memo.get_response(
        query=test_query,
    )
    print(f"Assistant: {response}")

if __name__ == "__main__":
    simple_demo()
```

- MemoryOS-MCP Getting Started

- 🔧 Core Tools

`add_memory`

Saves the conversation between the user and the AI assistant into the memory system, building a persistent dialogue history and contextual record.

`retrieve_memory`

Retrieves related historical dialogues, user preferences, and knowledge from the memory system based on a query, helping the AI assistant understand the user’s needs and background.

`get_user_profile`

Returns a user profile generated by analyzing historical dialogues, including the user’s personality traits, interests and preferences, and relevant knowledge background.

- 1. Install dependencies

```bash
cd memoryos-mcp
pip install -r requirements.txt
```

- 2. Configuration

Edit `config.json`:

```json
{
  "user_id": "user ID",
  "openai_api_key": "OpenAI API key",
  "openai_base_url": "https://api.openai.com/v1",
  "data_storage_path": "./memoryos_data",
  "assistant_id": "assistant_id",
  "llm_model": "gpt-4o-mini"
}
```

- 3. Start the server

```bash
python server_new.py --config config.json
```

- 4. Test

```bash
python test_comprehensive.py
```

- 5. Configure it on Cline and other clients

Copy the `mcp.json` file over, and make sure the file path is correct.

```
# This should be changed to the Python interpreter of your virtual environment.
"command": "/root/miniconda3/envs/memos/bin/python"
```

- Docker Deployment

You can run MemoryOS using Docker in two ways: by pulling the official image or by building your own image from the Dockerfile. Both methods are suitable for quick setup, testing, and production deployment.

- Option 1: Pull the Official Image

```bash
# Pull the latest official image
docker pull ghcr.io/bai-lab/memoryos:latest
docker run -it --gpus=all ghcr.io/bai-lab/memoryos /bin/bash
```

- Option 2: Build from Dockerfile

```bash
# Clone the repository
git clone https://github.com/BAI-LAB/MemoryOS.git
cd MemoryOS

# Build the Docker image (make sure Dockerfile is present)
docker build -t memoryos .
docker run -it --gpus=all memoryos /bin/bash
```

- MemoryOS-ChromaDB Getting Started

- 1. Install dependencies

```bash
cd memoryos-chromadb
pip install -r requirements.txt
```

- 2. Test

Edit the configuration in `comprehensive_test.py`:

```python
memoryos = Memoryos(
    user_id='travel_user_test',
    openai_api_key='',
    openai_base_url='',
    data_storage_path='./comprehensive_test_data',
    assistant_id='travel_assistant',
    embedding_model_name='BAAI/bge-m3',
    mid_term_capacity=1000,
    mid_term_heat_threshold=13.0,
    mid_term_similarity_threshold=0.7,
    short_term_capacity=2
)
```

Then run the test:

```bash
python3 comprehensive_test.py
```

Make sure to use a different data storage path when switching embedding models.
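One way to follow the note above is to derive the storage path from the embedding model name, so that data built with different models never shares a directory. The helper below is only an illustrative sketch: `storage_path_for` is a hypothetical name and is not part of MemoryOS.

```python
import re
from pathlib import Path


def storage_path_for(base_dir: str, embedding_model_name: str) -> str:
    """Return a per-model data directory (hypothetical helper, not a MemoryOS API)."""
    # Replace characters that are awkward in directory names,
    # such as the "/" in "BAAI/bge-m3".
    safe_name = re.sub(r"[^A-Za-z0-9._-]+", "_", embedding_model_name)
    return str(Path(base_dir) / safe_name)


if __name__ == "__main__":
    for model in ("BAAI/bge-m3", "all-MiniLM-L6-v2"):
        print(model, "->", storage_path_for("./comprehensive_test_data", model))
```

The returned string can then be passed as `data_storage_path` when constructing `Memoryos`.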

📋 Complete List of 13 Parameters

| # | Parameter Name | Type | Default Value | Description |
|---|---|---|---|---|
| 1 | `user_id` | str | Required | User ID identifier |
| 2 | `openai_api_key` | str | Required | OpenAI API key |
| 3 | `data_storage_path` | str | Required | Data storage path |
| 4 | `openai_base_url` | str | `None` | API base URL |
| 5 | `assistant_id` | str | `"default_assistant_profile"` | Assistant ID |
| 6 | `short_term_capacity` | int | `10` | Short-term memory capacity |
| 7 | `mid_term_capacity` | int | `2000` | Mid-term memory capacity |
| 8 | `long_term_knowledge_capacity` | int | `100` | Long-term knowledge capacity |
| 9 | `retrieval_queue_capacity` | int | `7` | Retrieval queue capacity |
| 10 | `mid_term_heat_threshold` | float | `5.0` | Mid-term memory heat threshold |
| 11 | `mid_term_similarity_threshold` | float | `0.6` | 🆕 Mid-term memory similarity threshold |
| 12 | `llm_model` | str | `"gpt-4o-mini"` | LLM model name |
| 13 | `embedding_model_name` | str | `"all-MiniLM-L6-v2"` | 🆕 Embedding model: `Qwen/Qwen3-Embedding-0.6B`, `BAAI/bge-m3`, or `all-MiniLM-L6-v2` |
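To keep these settings in one place, the table can be mirrored as a small typed container. The sketch below is an assumption-laden illustration: `MemoryosConfig` and `config_from_json` are hypothetical names (not shipped with MemoryOS), and only the parameter names, types, and defaults are taken from the table.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class MemoryosConfig:
    """Illustrative container mirroring the parameter table (not part of MemoryOS)."""
    # Required parameters
    user_id: str
    openai_api_key: str
    data_storage_path: str
    # Optional parameters, with the defaults listed in the table
    openai_base_url: Optional[str] = None
    assistant_id: str = "default_assistant_profile"
    short_term_capacity: int = 10
    mid_term_capacity: int = 2000
    long_term_knowledge_capacity: int = 100
    retrieval_queue_capacity: int = 7
    mid_term_heat_threshold: float = 5.0
    mid_term_similarity_threshold: float = 0.6
    llm_model: str = "gpt-4o-mini"
    embedding_model_name: str = "all-MiniLM-L6-v2"


def config_from_json(text: str) -> MemoryosConfig:
    """Parse a JSON object, rejecting unknown keys so typos surface early."""
    raw = json.loads(text)
    known = {f.name for f in fields(MemoryosConfig)}
    unknown = set(raw) - known
    if unknown:
        raise ValueError(f"Unknown parameter(s): {sorted(unknown)}")
    # A missing required key raises TypeError from the dataclass constructor.
    return MemoryosConfig(**raw)


if __name__ == "__main__":
    cfg = config_from_json(
        '{"user_id": "demo_user", "openai_api_key": "YOUR_OPENAI_API_KEY", '
        '"data_storage_path": "./memoryos_data"}'
    )
    print(cfg)
```

If the constructor accepts these keyword names as listed in the table, `dataclasses.asdict(cfg)` could be unpacked into `Memoryos(...)`; that coupling is an assumption and worth checking against the installed version.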

How It Works

- Initialization: `Memoryos` is initialized with user and assistant IDs, API keys, data storage paths, and various capacity/threshold settings. It sets up dedicated storage for each user and assistant.

- Adding Memories: User inputs and agent responses are added as QA pairs, initially stored in short-term memory.

- Short-Term to Mid-Term Processing: When short-term memory is full, the `Updater` module processes these interactions, consolidating them into meaningful segments and storing them in mid-term memory.

- Mid-Term Analysis & LPM Updates: Mid-term memory segments accumulate "heat" based on visit frequency and interaction length. When a segment's heat exceeds a threshold, its content is analyzed:

  - User profile insights are extracted and used to update the long-term user profile.

  - Specific user facts are added to the user's long-term knowledge.

  - Relevant information for the assistant is added to the assistant's long-term knowledge base.

- Response Generation: When a user query is received:

  - The `Retriever` module fetches relevant context from short-term history, mid-term memory, user profile & knowledge, and assistant's knowledge base.

  - This comprehensive context is used, along with the user's query, to generate a coherent and informed response via an LLM.
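The flow described in this section can be pictured with a toy model. This is a simplified sketch, not the MemoryOS implementation: the class names, the heat formula (visits plus number of QA pairs), the "flush everything into one segment" consolidation, and the reset after promotion are all assumptions made for illustration. The real `Updater` consolidates interactions into meaningful segments, which plain grouping does not capture.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple

QAPair = Tuple[str, str]  # (user_input, agent_response)


@dataclass
class Segment:
    """A mid-term segment; the heat formula is an assumption for illustration."""
    pages: List[QAPair]
    visits: int = 0

    @property
    def heat(self) -> float:
        # Assumed formula: visit frequency plus interaction length, equally weighted.
        return float(self.visits + len(self.pages))


@dataclass
class ToyMemory:
    short_term_capacity: int = 2
    mid_term_heat_threshold: float = 5.0
    short_term: Deque[QAPair] = field(default_factory=deque)
    mid_term: List[Segment] = field(default_factory=list)
    long_term: List[QAPair] = field(default_factory=list)

    def add_memory(self, user_input: str, agent_response: str) -> None:
        self.short_term.append((user_input, agent_response))
        if len(self.short_term) >= self.short_term_capacity:
            # Short-term memory is full: move its contents into one mid-term segment.
            self.mid_term.append(Segment(pages=list(self.short_term)))
            self.short_term.clear()

    def visit(self, index: int) -> None:
        """Record a retrieval hit; promote the segment once heat exceeds the threshold."""
        segment = self.mid_term[index]
        segment.visits += 1
        if segment.heat > self.mid_term_heat_threshold:
            # Stands in for profile and knowledge extraction into long-term memory.
            self.long_term.extend(segment.pages)
            # Reset so the same segment is not promoted again on every later visit.
            segment.visits = 0


if __name__ == "__main__":
    mem = ToyMemory()
    mem.add_memory("Hi, I'm Tom.", "Hello Tom!")
    mem.add_memory("I work as a data scientist.", "What data do you work with?")
    for _ in range(4):
        mem.visit(0)
    print("mid-term segments:", len(mem.mid_term))
    print("long-term entries:", len(mem.long_term))
```

Lowering `short_term_capacity` or `mid_term_heat_threshold` in this toy model makes consolidation and promotion happen sooner, which mirrors the role these settings play in the description above.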

MemoryOS Playground (Online)

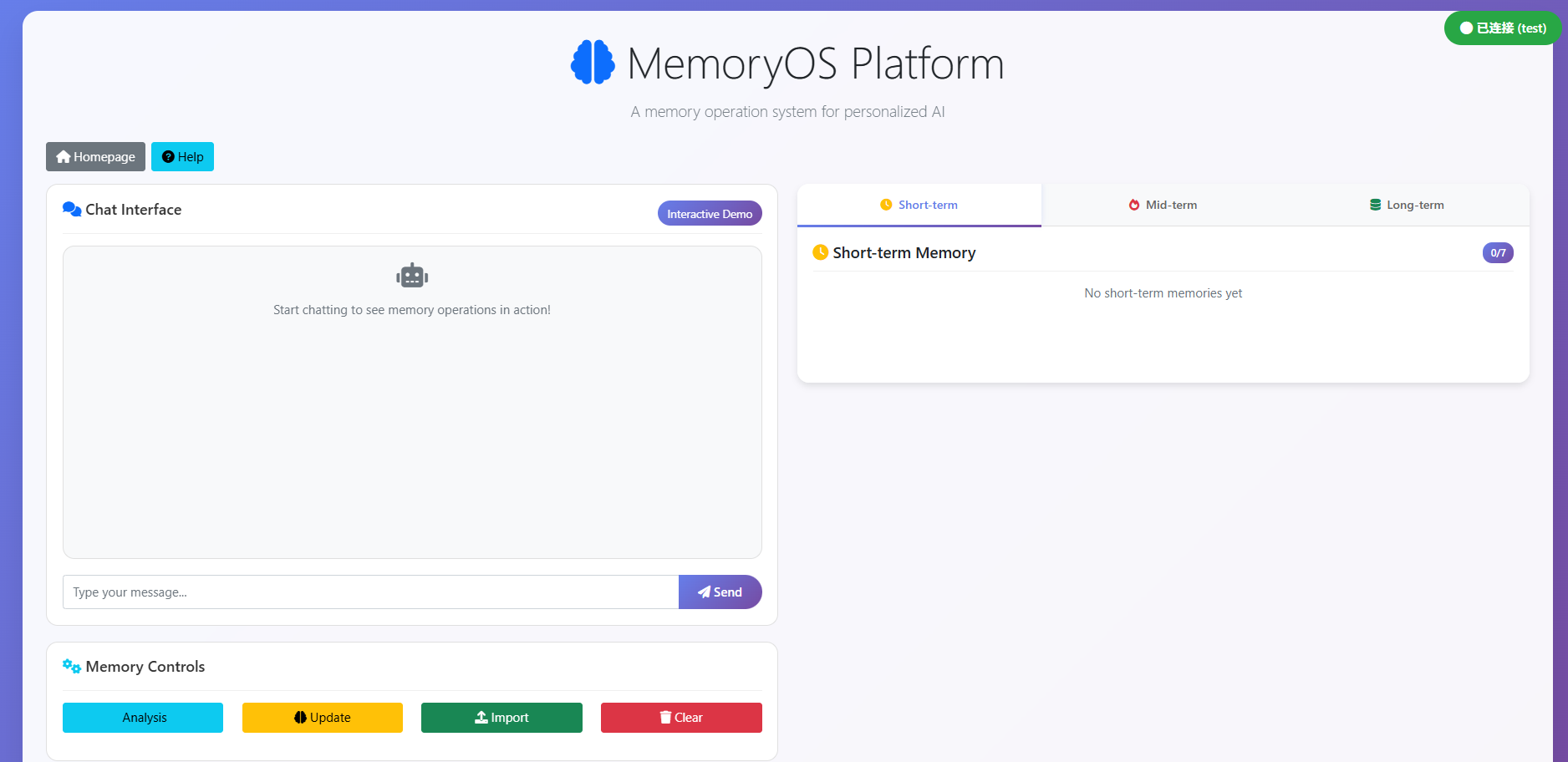

Go to MemoryOS Playground

Web-based memory analytics and management platform for MemoryOS. Coming soon!

MemoryOS Playground (Local)

Open Local Playground RepoLocal run steps:

cd MemoryOS/memoryos-playground/memdemo/

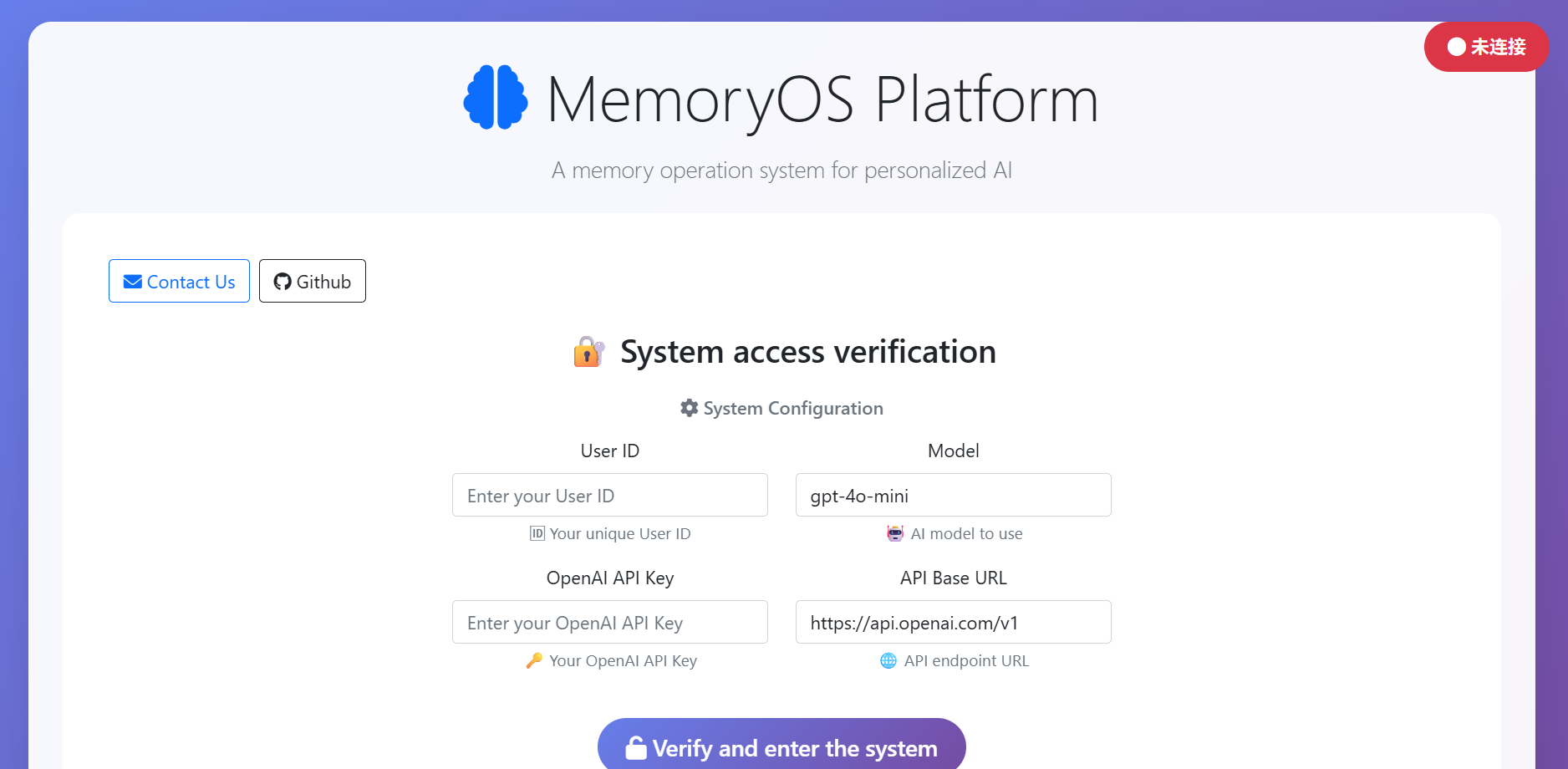

python3 app.py- After launching the main interface, fill in User ID, OpenAI API Key, Model, and API Base URL.

- After entering the system, click the Help button to see what each button does.

- The user's memory is stored under MemoryOS-main/memoryos-playground/memdemo/data

MemoryOS Public API Reference

PyPI

Memoryos

Constructor parameters

```python
Memoryos(
    user_id: str,
    assistant_id: str,
    openai_api_key: str,
    openai_base_url: str,
    data_storage_path: str,
    llm_model: str,
    short_term_capacity: int,
    mid_term_heat_threshold: float,
    retrieval_queue_capacity: int,
    long_term_knowledge_capacity: int,
    embedding_model_name: str
)
```

Methods

add_memory(user_input: str, agent_response: str) -> None

Add a user-assistant message pair to the memory system.

get_response(query: str) -> str

Retrieve and generate a response based on the memory system.

get_user_profile() -> dict

Get the user profile inferred from conversation history.
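As a usage illustration of `add_memory`, the sketch below loads an existing transcript into a memory object one pair at a time. `seed_memories` and `RecordingMemory` are hypothetical names introduced for this example. The stand-in class only records calls so that the snippet runs without an API key; in practice a `Memoryos` instance would be passed instead.

```python
from typing import Iterable, List, Protocol, Tuple


class SupportsAddMemory(Protocol):
    """Anything exposing the documented add_memory signature."""

    def add_memory(self, user_input: str, agent_response: str) -> None: ...


def seed_memories(memo: SupportsAddMemory, transcript: Iterable[Tuple[str, str]]) -> int:
    """Feed (user_input, agent_response) pairs to the memory system; return the count."""
    count = 0
    for user_input, agent_response in transcript:
        memo.add_memory(user_input=user_input, agent_response=agent_response)
        count += 1
    return count


class RecordingMemory:
    """Stand-in used only for this demo; a real Memoryos instance would go here."""

    def __init__(self) -> None:
        self.pairs: List[Tuple[str, str]] = []

    def add_memory(self, user_input: str, agent_response: str) -> None:
        self.pairs.append((user_input, agent_response))


if __name__ == "__main__":
    demo = RecordingMemory()
    added = seed_memories(
        demo,
        [
            ("Hi! I'm Tom, I work as a data scientist.", "Hello Tom! Nice to meet you."),
            ("I live in San Francisco.", "Good to know."),
        ],
    )
    print("pairs added:", added)
```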

MCP mode (MemoryOS-MCP server tools)

add_memory(user_input: str, agent_response: str) -> None

Store a conversation pair in the memory system.

retrieve_memory(query: str) -> list

Retrieve relevant past conversations, preferences, and knowledge.

get_user_profile() -> dict

Return the analyzed user profile.
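MCP clients such as Cline normally build tool requests for you, but it can help to see the shape of the message. The sketch below serializes a `tools/call` request in the JSON-RPC 2.0 form used by the Model Context Protocol. `build_tool_call` is a hypothetical helper, and the argument names are taken from the signatures above; whether the server expects additional arguments is not covered here.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Argument names follow the add_memory and retrieve_memory signatures listed above.
    print(build_tool_call(1, "add_memory", {
        "user_input": "Hi! I'm Tom.",
        "agent_response": "Hello Tom!",
    }))
    print(build_tool_call(2, "retrieve_memory", {"query": "What is my job?"}))
```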